Dune 2: Life of Brian with Space Mexicans

It’s funny: I thought the first one was not a good movie, but at least it wasn’t bad. This one is actively bad. In the interests of saying something nice about it: the sets looked cool, especially Harkonnen land. As for everything else: it is a space version of “Life of Brian.” I guess the first one was a tragedy, this one is a comedy and the last one will be a farce.

Feyd Rautha: I get it, dude’s supposed to be bad. When you make a character go around just cutting people’s throats for no apparent reason, he goes from le bad to le cheesebag comic book character. Why not give him a moustache he can twirl while we’re at it? Sting isn’t a good actor, but he was directed better, and so his portrayal wins. Also him imitating his titular uncle’s voice was cringe, as was his acting like a man of honor (as opposed to a cartoon of a psycho) in the end.

Stilgar: still can’t decide if he’s mexican or ostjuden rag merchant. Worse than that, he’s an active grovelling dumbass in this movie. The book character, and Lynch’s stiff portrayal of him had nobility. This one, he’s a groveling and bumbling bugman; a character not from Dune but “Life of Brian.”

I can get it for you wholesale vato

Fremen: acted like a bunch of filthy imbecile peasants rather than noble desert warriors. The black ones disappeared somehow, perhaps genocided along with the Atreides, so in this one they were more like a bunch of tweaker space Mexicans. I kept expecting them to roll out in Chevy Impalas with hydraulic suspensions. There’s no sense of nobility or why they might be so badass: just a bunch of credulous, excitable ethnic numskulls with no self control who seem eager to follow some floppy haired pasty twink and his hippy mom for, like, reasons. The Fremen don’t even have burritos; they’re just groace slobby peasants who mysteriously have super fighting powers because it’s in the original source material (which actually explains why the Fremen were awesome). I was rooting for the Harkonnens in every scene they were in. They were mostly actively comical.

Sardaukar: The director made them interesting in the first part (they were just dudes in garbage bags in the Lynch Dune). Suddenly turned from fearsome warriors and the source of Imperial power into a bunch of incompetent and passive wimps quickly defeated by a bunch of excitable space Mexicans because the script didn’t want to deal with dramatic tension or, like, reasons.

Irulan: the actress portraying her gave a convincing performance as a middle aged American office worker, complete with skin glycation from excessive sugary starbucks consumption and speaking her lame thoughts about galactic HR policy out of her schnozz. The actresses name is also “Pugh” which sums up my reaction to her ‘orrible faaaaaace. Compare to Lynch version.

Holy shit this bitch is ugly

Chani: portrayed as a grouchy Gen-Z Americunt who has never experienced authentic genital quakes without the use of power tools and thinks her boyfriend (literal world conqueror and savior) is a retard because he can’t ring her bell. I guess this is supposed to be relatable, and probably is for most Americans under 40. Unlike the character in the book, who was a religious fanatic, this one is an atheist (and of course a heckin badass girl-boss). I guess she’s the main character in this movie; it would be fine if her dramatic range were a little wider than between frowning and “can I speak to the manager” tier frowning.

The Emperor of the Known Universe: Looks more like a homeless schizo muttering to himself while wearing a mumu. Walken’s character has no gravitas, intelligence or strength of will, making his defeat kind of ridiculous and obvious. Wow how surprising a bunch of bumbling Space Mexicans and a dumb kid defeated the schizo homeless guy: I was on the edge of my seat. More cowbell.

I’m emperor I can wear a dress and not brush my hair if I want to!

Lady Jessica: got facial tattoos for some reason. None of the other reverend mothers or Fremen got them. Otherwise the same dimwit portrayal as in the first one; maybe a little more bitchy. Stupid and still neurotic psychedelic witch, now with talkey embryo Northman-witch inside, not the most interesting character as in the book.

Paul: played the same way as in the first one; a Mary Sue phoning it in who’d rather be playing vidya. BTW he has zero chemistry with Zendaya, which is sort of hilarious and pathetic at the same time. They might as well be in different rooms in their “love scenes.” Their indifference was actively funny: it would have been better if they hated each other.

Rabban: was a little too retarded and comical, honestly. The whole movie would have been a million times better if Bautista played Paul.

Gurney: he displayed more elan in the first film; morphed into more of a janitor surf bum in this one.

Things missing from this movie:

- Explanation and world building; aka CHOAM and the Guild and the Mentats are MIA. The guild is what drives the actual story: they need the psychedelic worm vomit to drive their spaceships. Lynch understood this and had the good graces to tell the viewer about it.

- Dramatic tension: nobody knows why Paul doesn’t just go souf and take his destiny -his white friends are all saying “do it do it do it,” and he mostly gives the impression he’d rather play vidya, smoke spice and bang his animated menstrual cramp of a GF.

- Human emotion: nobody has a normal human emotion through the entire thing. Even the “love affair” doesn’t rise to the level of teenage boner tier human emotion.

- Jihad: as we know the Fremen were supposed to be Chechens, and so their holy wars are called “Jihad.” They don’t use the word in this adaptation; presumably for some fake and gay political reason. I have nothing against space mexicans, but their religious fervor was more comedy than scary.

- Acting: even hammy overacting would have been worth watching. Lame performances all around.

One of the most striking things is how ugly everyone in this movie is. While the backgrounds and sets look cool, there isn’t a single handsome man or pretty girl in the entire film. Everyone looks like a shambling bum, a grease stain or an office dwelling thing. I don’t think Zendaya is pretty, but she manages to look worse in this movie than in any other context. Looking at the list of producers: there needs to be more horny jewish guys working on things like this, and fewer middle aged women with empty egg cartons. It will be easier on everyone’s eyes, plus, this is traditional, from the times when Hollyweird could make watchable movies. It’s a movie: I want to be entertained and see unrealistically good looking movie stars. I can go get a job in NYC if I want to gawk at homely pre-diabeetus glycation-skin office workers, smelly mystery meats, skinnyfats in plastic suits and shambling schizoid bums.

The “music” is also considerably more irritating when listening on the computard instead of in an IMAX theater. “Hans Zimmer plays the whoopie cushion in 3-d sound” doesn’t work so well at home.

As I said earlier, Villeneuve is a sperdo. He is incapable of directing actual human beings or making a plot arc with characters that make any kind of sense. He should be some kind of special effects director and let someone with normal emotional range do the stuff he isn’t interested in.

The movie was more Life of Brian with post apocalypse Mexicans instead of an interpretation of the Dune book. Someone should recut it with Laurel and Hardy or Benny Hill music and a laugh track instead of Hans Zimmer playing the fart machine; it would make more sense.

The problem with tech journalism

I came across this nonsense on tech journalism recently:

A clodpated former reporter for WaPo, Vox and Ars Technica (Timothy B. Lee) attempts to lecture us on the problems with tech journalism. This is fun stuff as this Princeton-educated ninnyhammer doesn’t seem to be conversant in the English language terminology associated with his profession. Consider:

The first few paragraphs of a news story — known as the lead

It’s actually known as the “lede.” The word “lead” (which he misuses 5 times) is both the heavy metal and the information source reporters are supposed to chase down, not the opening text of an article on a subject.

One approach: Identify an emerging technology that has the potential to be the “next big thing.” …. Reporters pitching these stories to their editors have an obvious incentive to exaggerate the importance of the technology or company they are writing about. And once they’ve started work on a story, they have a strong incentive not to ask too many skeptical questions.

There are a couple more reasons he never mentions for reporters’ rampant lack of skepticism in tech articles (or anything else). The most obvious one is that tech reporters are fairly low IQ people who are incapable of constructing any useful skepticism that would help anybody. This lack of intelligence also tends to bleed over into a general lack of confidence: when they look around and see everyone praising, say, quantum computing or autonomous vehicles, they of course wouldn’t dream of contradicting all those “smart people.” Hell, they don’t even know the difference between a “lede” and a “lead” -how are they supposed to know when autonomous vehicle companies are faking it?

Beyond that there is the fact that there are 6 public relations professionals (aka professional business propagandists) for every reporter in the country. As I have said before, this ratio is even more unfavorable in tech reporting; if you include marketing people in with PR people, its probably 1000:1 against tech reporters. The ensuing human information centipede is the main reason why clowns like SBF and Elizabeth Holmes got as far as they did: the reporters were overwhelmed by this denial of reality attack by the PR propagandists. Many PR people take the obvious shortcut of simple bribery: I’ve been there when it happens. $20k is peanuts compared to a PR person’s salary, but you can get some pretty good stuff in print for that kind of loot. Tech reporters and their editors need money too. They also need coke and hookers -it is more plausibly deniable than stacks of cash changing hands, and more fun for the PR person.

Everyone just assumes this doesn’t apply to them

I’m sure it’s well beyond simple bribery. For example, nobody talks about the ebay stalking scandal any more, but I’m 100% sure this isn’t the only time this sort of thing has happened to reporters. The stakes in that case were much lower than most of the business we’re talking about, and it only involved a couple of obscure bloggers grousing. It is of course a lot easier to ruin an employed reporter’s life than a couple of self employed bloggers.

Conversely, reporters love to catch big companies doing something illegal, unethical, or anticompetitive.

Untrue: contemporary reporters mostly love nonsense “gotcha” moral preening baloney against perceived enemies in the endless culture wars. The example Lee correctly mentions is a vacuous but negative article about Uber in 2014 being not very helpful to anyone’s understanding. What he forgets to mention is why numskull “reporters” hated Uber back then. Uber was the enemy in 2014 because its founder once called it “Boober” as a weak nerd joke in a GQ interview. This means he was probably a normal male of the human species rather than the type of castrato favored by the present regime and its reporter toadies, but it was enough to spin up the hate machine. Since the chowderhead who wrote a negative article about Uber doing normal business things couldn’t get in on the “Boober” scandal a few months earlier, he did the next best thing: he made up a bunch of self righteous but nebulous indignation about how they conduct their business. Later on Boober got into trouble for not buying the wife of another fraudster a leather jacket in a size which was flattering to her figure while she was employed there. She’s now a tech reporter, water finding its level no doubt, but she did manage to get Uber’s founder fired, ultimately, for saying “Boober” in an interview back in February of 2014.

Oh noes boober-sayer, wouldn’t you rather hear from Zuck instead?

And now, curiously …. nobody seems to dislike Uber. I never cared that Travis said “boober” or didn’t buy Susan Fowler a more flattering size of jacket, though I could see NPCs around me who really cared a lot about these things. I only started using Uber in Europe around 2021 because they’re usually better than taking the taxi. The NPCs don’t seem to mind them any more either. I bet Uber has done plenty of actually shitty things one could report on, but nobody does because its present CEO is some Iranian sleazebag who used to work for sleazebag gangster Barry Diller, rather than a goofy nerd boy who says things like “boober.”

Contra Lee’s assertions, corporations are mostly accorded ridiculous leeway in all mainstream reporting despite overt and rampant criminality at almost all levels of American society. This apparently happens because they ape platitudes which make NPCs happy. That, PR disinfo, bribery and darker means: I’m pretty sure anyone at risk of surfacing something shitty regarding present Uber would lose their jobs or worse. Diller is mostly a gangster as far as I can tell, and I assume his protegee is no better. If going after gangsters is too scary for you: simply explaining in a couple of paragraphs any of the half dozen criminal cartels that make up the US health care system is a socially useful thing to do: no mainstream publication has ever done this to my knowledge. Nobody talks about chemical companies either: how the fuck do chemical companies get good press? Ya, you know it: PR and bribery (and worse). Hell even arms manufacturers get decent press now a days, after all they’re into diversity and inclusion while their products blow up middle eastern and eastern European subhumans who obviously deserve it for being insufficiently diverse and inclusive.

we make shit that kills people, but we like the black people!

The awkward reality is that tech journalism is the way it is because that’s mostly what consumers want.

Nah, there are plenty of people who would prefer adult tech journalism. They’re not going to pay for a Princeton educated blockhead who doesn’t know the difference between “lede” and “lead” to regurgitate tech PR platitudes at them. Lots of people would like to know what’s actually going on in the technology world, if only to work at a company or on a problem likely to succeed. Lots of these people have money. Investor types don’t care so much these days: using the magic of public relations they more or less create their own realities, but there are middling rich people who want to know. Generally people who want to figure out the world don’t rely on clowns LARPing as smokestack-and-telegraph era reporters, they form closed communities or rely on people like me who do boob dissection for sport rather than as vocation. All reporters prefer the central model as they think it accords them some status, but it doesn’t: not any more. To actually report things you have to remove the letter P and the letter R from the truth, and these days, official “journalism” is just a cloaca for pumping PR propaganda lizard shit into people’s brains.

Saving the world and “passion” is bullshit

There are two things I hear from tech people which really, really harsh on my mellow. One is that you should follow your passions, and the other is that your passion has something to do with saving the world or making the world a better place.

You work to make money, not make the world a better place. Making the world “a better place” may be a side effect of your business (it’s arguably a side effect of most businesses outside of private equity and internet advertising), it might be a brand for your business, but if it’s what defines your business, your business will probably fail because you’re not thinking about business, you’re thinking about something else. For example, the Davos-spawn company “Better Place.” It was obvious on inspection this would fail, and even if it succeeded via regulatory capture it would make the world more dystopian. Electric cars continue to be a stupid idea for general use. There simply ain’t enough electricity in the fragile power grid, and most of it comes from fossil fuels, so converting automobile use to electrical is out of the question. Putting that aside, using exchangeable battery packs owned by some giant firm is shady, a liability nightmare and insane. They burned a billion dollars and shipped batteries for 1400 cars which ended up being useless without a company to support them.

There was no business case for “Better Place.” There was no physical or economic need for this complex and ridiculous scheme. This wouldn’t have helped the environment at all: it is impossible for this hare-brained scheme to do so for simple thermodynamics reasons. But it was something that caught the imagination of people who wanted to make the world a better place. You could have made the world a better place working at SpaceX or even Google. If you like electric cars, Tesla made numerous real breakthroughs, and was relentless about making money (IMO their cars suck). You could also have made the world a better place by piling up giant heaps of money and donating it to worthy causes. Or if you’re incapable of this, you could volunteer at the soup kitchen, or help bums kick their drug habits: that is actually helping, rather than nerd fantasies about saving the planet like Mr. Spock. Nebulous pieties and bullshit do not make the world a better place. Get your nebulous pieties from religion like a normal human being; your personal vision of “making the world a better place” is almost certainly false because you’re a dumb monkey more susceptible to mass media programming with obvious falsehoods than in any other era of human history.

Muh passion

You can have fun at work, you should be interested in your work and lowering the entropy of the universe, and you can do work that gives you energy and satisfaction if you’re lucky, you can even be obsessed with work, but you should not be passionate about your work. Save that for the bedroom. “Follow your passions” is a nostrum, as Scott Adams put it, that successful people use. It’s a distraction from how they became successful, which is ruthless competition, fierce struggle, hard work and luck. Successful people are also generally luckier, smarter, better looking and harder working than their competitors, and may have been born with other advantages. Nobody wants to hear that. They want to hear some happy bullshit about following your passions. I might believe that Oligarch Bezos was passionate about selling crap on the internet at some point: it’s easy to be passionate when you’re winning. Lots of people followed their passions into the poor house. His biggest success was EC2 API shit, which I 100% guarantee was nerd rage (or admiration) at how service APIs worked at DE Shaw, rather than any passionate desire to build really modular service APIs.

Imagine investing with someone who is actually following their passions! Adams uses the example of the sports memorabilia nerd opening a sports memorabilia shop being a terrible investment. Let’s make it more obvious: would you invest in a coke dealer who is passionate about high quality cocaine? Would you invest in a brothel run by a sex addict? You want to invest in someone that wants to make money, has a clear path to doing so, and has the drive and talent to pull it off. Dental practices are good investments; nobody is passionate about dentistry, but everyone loves money and healthy teeth. People with 7 kids to feed are good investments. People working on their passions as avocation are numskulls.

Just following my passions

I get this silly happy talk from Silicon Valley dunderheads and their victims all the time. People ask me about my passions as if that’s what motivates me. I’ve helped a few things that people were doing as passion projects (which made the world a better place even). Nobody made money on these projects, and whatever good my efforts did for them would have been better spent making money and donating it to charity, or simply giving it to a street bum who will use it on drugs. This sort of thing doesn’t go anywhere: actually selling things would have been better for the state of the human race.

These sorts of things are American Upper-Middle class nostrums. Like all American Upper-Middle class nostrums, they’ve conflated concepts of personal success, making money and status anxiety. The UMC is ridiculously status sensitive in a way other social classes are not, because they realize their position is precarious, and are terrified of being normal schlubs; this is one of the things which makes them so ridiculous. They tell themselves nonsense like this to grub up the status pole with their wives’ boyfriends. It’s rubbish and everyone should laugh this sort of nonsense to scorn.

If you want to get all weeb about it, the Japanese have a concept called Ikigai. As far as I can tell it’s their version of what Aristotle talks about in his various Moralia, which nobody reads any more, but everyone should. I think this concept is what silly con valley dorks are reaching for when they say they follow their passion or save the world or whatever, but because they’re using different words, people don’t parse them right. Stupid upper middle class American class-neurotic nostrums: these are just confusion.

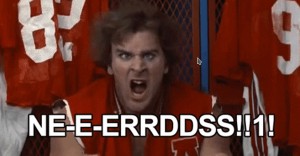

Against the nerds

One of the delusions of modern times is that we need a nerd clerisy to help us run things. We’re presently at the end of the post-WW-2 order (or the post-broadcasting order), sort of nervously contemplating what happens next. There has been an active clerisy of nerdoids in place since the 1930s before the war: FDR implemented this idea of a nerdoid clerisy in its current form. Herbert Hoover offered a different nerdoid clerisy -he was an excellent engineer and administrator and was more effective than what replaced him. FDR’s nerds were arguably a failure from the start: FDR’s clerisy put the “Great” in “Great Depression.”

You know who didn’t have a Great Depression? Knuckle dragging anti-intellectual fascisti, that’s who: people who were sans clerisy. Literal beer hall philosophers. The US clerisy did take credit for winning WW-2. Whether or not they did anything useful is questionable: the Russians did most of the actual fighting. The US had the foresight to build nukes and ramp up military production to help the Russians kill our enemies (and themselves -an important unspoken goal of WW2) for us. Some of this plan was executed by various kinds of bureaucrat-nerd, but few to none of the important decisions were made by such people, who probably supported the communists on principle. Nukes would have been built without Oppenheimer, but probably wouldn’t have without the mostly unsung Leslie Groves who did all of the important leadership work, including hiring Oppenheimer.

I bet Groves gave Oppie noogies when out of range of the cameras: the weak should fear the strong

Groves was 0% nerd race; he was an Army engineer, a type of cultured thug who has existed since the late Stone Age. He had zero tolerance for nerdoid bullshit, and you can see how hard he mogs Oppie in the photo above. Groves is the type of man leaders have relied on for all of human history, and quite a few centuries before. Groves, to put it in American terms, was more of a Captain of the Football team than he was a nerd. The same can be said of other technical work done in Radar. Nerds had little to do with American victory in a “calling the shots” sense. They helped: but only because they were told what to do and kept under strict control by the Captain of the Football team. Subsequently, nerds and their bureaucracies flourished in the US, essentially cargo-culting what happened in WW-2, leaving out the all-important urgency and accountability to the Captain of the Football team who mercilessly bullycided them into producing results on a timeline, as is correct and proper.

After American victory, nerds proliferated like cockroaches, and to this proliferation was attributed a lot of the postwar American financial and industrial dominance. American financial and industrial dominance was more readily attributed to the fact that it held the world’s gold and the only functioning factories that hadn’t been bombed to cinders. The proliferation of nerds and nerd institutions was a result of prosperity; not a driver of it. You can make new nerds more easily than we do now should we happen to need more; the sciences did better when a Ph.D. was unnecessary or a brief apprenticeship. This compared to the present system where science nerds aren’t even paper productive almost until their 30s, and are often still kissing ass and publishing bullshit papers to get tenure in their 40s.

A historical example of astounding governmental success: the East India Company (the US was modeled after it; the flag anyway). None of the men in it were nerds. All of them were Leslie Groves types. British gentlemen, while often superbly educated in the classics and in technical fields, were not nerds. The British elite were known by continentals to be anti-intellectual. Then you go look at the situation where nerds run everything: Weimar Germany, current year, any random 1000 years of shitty Chinese history, peak Gosplan Soviet times. Nerd leadership isn’t good. Nerds belong in the laboratory. If they’re not in the laboratory they should be bullycided. Even when in the lab they need to be held accountable for producing good results; nerds will always tell you some bullshit story about their fuckups. That includes bureaucrat nerds pushing bits and paper. Do something useful with matter or GTFO. Nerds like Robert McNamara come up with failson do-everything products like the F-111, the golden dodo-bird of its time. Not-nerds who put their ass on the line like John Boyd come up with the F-16; after almost 40 years, still the backbone of Western air forces.

The same is true in tech leadership. Most of the leaders of nerds who matter are not really nerds, even if they fake it for the troops. Zuck does Brazool Jiu Jitsu and kills goats: he ain’t doing leetcode pull requests. Elon was a street fighter before he developed his interest in payment systems and rockets, and his personal life is more like Andrew Tates than that of a nerd. The nerds who founded google and kept it an engineering company in its early days hired a womanizing chad to make it a useful company, and speaking of Larry types, Larry Ellison is both a womanizing saleschad and lunatic jet pilot rather than a nerd. Look at the most prominent actual nerd entrepreneur in recent history: Sam Bankman Fiend. Archetypical nerd; he even worked at uber-nerdy Jane Street and had filthy sex orgies with other ugly nerdoids. Nerds need to be bullycided. It’s good for them, good for the organizations they work for.

Being intelligent isn’t the same as being a nerd. Though nerdism is touted as being a sort of definition of intelligence: it isn’t. Being a nerd is being a disembodied brain; a king of abstraction. Being a nerd is a lifestyle open to obvious stupidians. Even when they’re bright, nerds lack thumos; they have a hard time operating outside the nerd herd. If something is declared “stupid” the nerd won’t give it a second thought. If other nerds like a thing, or are declared “expert,” even the 200 IQ nerd will go along with it, because being a nerd is his identity. This is why the football star is superior to the nerd: his life isn’t made of abstractions -it’s made of winning, which is something that happens when you’re right, not when you do the proper nerd-correct thing to sit at the nerd table in high school. Right now there are probably a hundred thousand nerds trying to predict the stock market with ChatGPT (aka autocomplete). That’s what a nerd does: acts on propaganda as if it is real information. Chad either exploits a bunch of ChatGPT specialists and flips it as a business to a greater fool, or invents a new branch of mathematics to beat the market the way Ed Thorp did.

Objectivity is another thing the nerd lacks. Nerds are masters of dogma. They’re good at putting dogma into their brains: that’s in one sense what “book learning” is -you have a sort of resonator in your noggin that easily latches into patterns. People who are good at tests are good at absorbing propaganda. They’re bad at noticing the thing they absorbed is propaganda; that takes another personality type. One that nerds associate with “stupid people” who bullied them in high school. You know, the ones who should be their bosses.

Nerds fall in love with their ideas, even when they’re wrong. Architecture astronauts, mRNA enthusiasts, marxists and other schools of economics, diet loons, snake-oil pharmaceutical salesmen, “experts” in most fields -these are ideologies that people can’t course correct without losing face. Since being “smart” is all a nerd has, they stick with shitty ideas even unto their actual deaths. Actually intelligent people play with ideas, consider where they might be useful and where they might break down. Ideas are like wrenches; they’re not useful in every situation, and you have to pick the right one for the job. You have to put down the wrong wrench and pick a screwdriver sometimes. That’s why you need a General Groves to manage the nerds: your legions of shrieking nerd wrench-enthusiasts can be helpful in putting together a car, but they need to be bullycided into not using a wrench to install rivets or screws. The other useful management technique is to pair them with machinists who will make fun of them for trying to use a wrench for everything: the China Lake approach.

It’s OK to be a nerd; nerds can serve a purpose. We can even admire the nerd if he’s actually capable of rational thought. It’s not OK to give nerds leadership positions. You need people who played sports or who killed people for a living, or otherwise interacted with matter and the real world. The cleric doesn’t order the warrior in a functioning society; it’s the other way around.

Examples of nerd failure:

https://gaiusbaltar.substack.com/p/why-is-the-west-so-weak-and-russia

https://collabfund.com/blog/the-dumber-side-of-smart-people/
