Neglected machine learning ideas

This post is inspired by the “metacademy” suggestions for “leveling up your machine learning.” They make some halfway decent suggestions for beginners. The problem is, these suggestions won’t give you a view of machine learning as a field; they’ll only teach you about the subjects of interest to authors of machine learning books, which is different. The level-3 and level-4 suggestions they make are not super useful either: they just reflect the tastes of the author.

The machine learning literature is vast; its techniques are bewilderingly diverse, multidisciplinary and seemingly unrelated. It is extremely difficult to know what is important and useful. While “metacademy” has the horse sense to suggest reading some books, the problem is that no book can even give you a survey of what is available, or make you aware of things which might be helpful. The best guide for the perplexed, in my not at all humble opinion, is Peter Flach’s introductory text, “Machine Learning: the Art and Science of Algorithms that Make Sense of Data,” which at least mentions some of the more obscure techniques and gives pointers to other resources. Most books are just a collection of the popular techniques. They all mention regression models, logistic regression, neural nets, trees, ensemble methods, graphical models and SVM type things. Most of the time, they don’t even bother telling you what each technique is actually good for, or when you should choose one approach over another (Flach does; that’s one of many reasons you should read his book).

Sometimes I am definitely just whining that people don’t pay enough attention to the things I find interesting, or that I don’t have a good book or review article on the topic. Sleep deprivation will do that to a man. Sometimes I am probably putting together things that have no clearly unifying feature, perhaps because they’re “not done yet.” I figure that’s OK: subjects such as “deep learning” are also a bunch of ideas that have no real unifying theme and aren’t done yet, and this doesn’t stop people from writing good treatments of the subject. Perhaps my list is a “send me review articles and book suggestions” cry for help, but perhaps it is useful to others as an overview of neat things.

Stuff I think is egregiously neglected in books and in academia, in an unranked, semi-clustered listing below:

Online learning: not the “Khan academy” kind, the “exposing your learners to data, one piece at a time, the way the human brain works” kind. This is hugely important for “big data” and timeseries, but there are precious few ML texts which go beyond mentioning the existence of online learning in passing. Almost all textbooks concentrate on batch learning. Realistically, when you’re dealing with timeseries or very large data sets, you’re probably doing things online in some sense. If you’re not thinking about how you’re exposing your learners to sequentially generated data, you’re probably leaving information on the table, or overfitting to irrelevant data. I can think of zero books which are actually helpful here. Cesa-Bianchi and Lugosi wrote a very interesting book on some recent proofs for online learners and “universal prediction” which strike me as being of extreme importance, though this is a presentation of new ideas, rather than an exposition of established ones. Vowpal Wabbit is a useful and interesting piece of software with OK documentation, but there should be a book which takes you from online versions of linear regression (they exist! I can show you one!) to something like Vowpal Wabbit. Such a book does not exist. Hell, I am at a loss to think of a decent review article, and the subject is unfortunately un-googleable, thanks to the hype over the BFD of “watching lectures and taking tests over the freaking internets.” Please correct me if I am wrong: I’d love to have a good review article on the subject for my own purposes.
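To make the "online linear regression" remark concrete, here is a minimal sketch of one such learner: the least-mean-squares (Widrow-Hoff) update, which sees each example once and then discards it. This is only an illustration, not the specific algorithm the post has in mind and not how Vowpal Wabbit is implemented; the class name, the learning rate and the synthetic data stream are all assumptions made up for the example.

```python
import random


class OnlineLinearRegression:
    """Least-mean-squares (Widrow-Hoff) linear regression, updated one example at a time."""

    def __init__(self, n_features, learning_rate=0.05):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate  # assumed value; needs tuning on real data

    def predict(self, x):
        return self.bias + sum(w * xi for w, xi in zip(self.weights, x))

    def update(self, x, y):
        """Predict first, then learn from the revealed target. Returns the pre-update error."""
        error = y - self.predict(x)
        self.bias += self.learning_rate * error
        self.weights = [
            w + self.learning_rate * error * xi for w, xi in zip(self.weights, x)
        ]
        return error


def run_synthetic_stream(n_steps=5000, seed=0):
    """Feed a made-up stream y = 3*x0 - 2*x1 + 0.5 + noise through the learner."""
    rng = random.Random(seed)
    model = OnlineLinearRegression(n_features=2)
    for _ in range(n_steps):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        y = 3.0 * x[0] - 2.0 * x[1] + 0.5 + rng.gauss(0, 0.01)
        model.update(x, y)  # the example is never stored
    return model
```

Because `update` returns the error made before learning from the point, the same loop gives a running out-of-sample error estimate for free, which is one of the practical attractions of the online setting.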

Reinforcement learning: a form of online learning which has become a field unto itself. One of the great triumphs of machine learning is teaching computers to win at Backgammon. This was done via a form of reinforcement learning known as TD-learning. Reinforcement learning is a large field, as it has been used with great success in control systems theory and robotics. The problem is, the guys who do reinforcement learning are generally in control systems theory and robotics, making the literature impenetrable to machine learning researchers and engineers. Something oriented towards non-robotics problems would be nice (Sutton and Barto doesn’t suffice here; Norvig’s chapter is the best general treatment I have thus far seen). There are papers on applications of the idea to problems which do not involve robots, but none which unify the ideas into something comprehensible and utile to an ML engineer.
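For readers who have never seen TD-learning written down, the following is a minimal sketch of tabular TD(0) on a toy problem with no robots in it: a five-state random walk that pays 1 for exiting on the right and 0 for exiting on the left. It is nowhere near the Backgammon system (which used a neural network as the value function); the function name, step size and episode count are assumptions chosen for the illustration.

```python
import random


def td0_random_walk(n_states=5, episodes=10000, alpha=0.02, gamma=1.0, seed=0):
    """Estimate state values of a symmetric random walk with tabular TD(0)."""
    rng = random.Random(seed)
    # values[0] and values[n_states + 1] are terminal states and stay at 0.
    values = [0.0] * (n_states + 2)
    for _ in range(episodes):
        state = (n_states + 1) // 2  # start in the middle
        while 0 < state < n_states + 1:
            next_state = state + rng.choice((-1, 1))
            reward = 1.0 if next_state == n_states + 1 else 0.0
            # TD error: how much better or worse the one-step lookahead is
            # than the current estimate for this state.
            td_error = reward + gamma * values[next_state] - values[state]
            values[state] += alpha * td_error
            state = next_state
    return values[1:-1]
```

For this walk the true value of state i is i / (n_states + 1), so the estimates can be checked against a known answer. Note that the learner updates after every single transition, which is what makes it a form of online learning.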

“Compression” sequence prediction techniques: this is another form of online learning, though it can also be done in batch mode. We’re all familiar with this; when google tries to guess what you’re going to search for, it is using a primitive form of this called the Trie. Such ideas are related to standard compression techniques like LZW, and have deep roots in information theory and signal processing. Really, Claude Shannon wrote the first iterations of this idea. I can’t give you a good reference for this subject in general, though Ron Begleiter and friends wrote a very good paper on some classical compression learning implementations and their uses. I wrote an R wrapper for their Java lib if you want to fool around with their tool. Boris Ryabko and son have also written numerous interesting papers on the subject. Complearn is a presumably useful library which encapsulates some of these ideas, and is available everywhere Linux is sold. Some day I’ll expound on these ideas in more detail.
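As a rough illustration of the idea, here is a toy variable-order context predictor: it counts which symbol follows each recent context (the counts dictionary plays the role of a trie) and predicts from the longest context it has seen before. This is a simplified sketch, not the algorithms in the Begleiter paper or the Complearn library; the class name, the back-off rule and `max_order` are assumptions for the example.

```python
from collections import Counter, defaultdict


class ContextPredictor:
    """Toy variable-order Markov predictor over a stream of symbols."""

    def __init__(self, max_order=3):
        self.max_order = max_order
        self.counts = defaultdict(Counter)  # context tuple -> Counter of followers
        self.history = []

    def _context(self, order):
        # Slice from an explicit start index so that order 0 yields the empty context.
        return tuple(self.history[len(self.history) - order:])

    def predict(self):
        """Most frequent follower of the longest previously seen context, or None."""
        for order in range(min(self.max_order, len(self.history)), -1, -1):
            followers = self.counts.get(self._context(order))
            if followers:
                return followers.most_common(1)[0][0]
        return None

    def update(self, symbol):
        """Record the symbol under every context length, then extend the history."""
        for order in range(min(self.max_order, len(self.history)) + 1):
            self.counts[self._context(order)][symbol] += 1
        self.history.append(symbol)


def online_accuracy(sequence, max_order=3):
    """Fraction of symbols predicted correctly when predicting before each update."""
    model = ContextPredictor(max_order)
    hits = 0
    for symbol in sequence:
        hits += model.predict() == symbol
        model.update(symbol)
    return hits / len(sequence)
```

Replacing "most frequent follower" with a probability estimate for every symbol turns the same structure into the front end of a compressor, which is the connection to LZW-style methods the paragraph alludes to.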

Time series oriented techniques in general: a large fraction of industry applications have a time component. Even in marketing problems dealing with survival techniques, there is a time component, and you should know about it. In situations where there are non-linear relationships in the time series, classical regression and time-series techniques will fail. In situations where you must discover the underlying non-linear model yourself, well, you’re in deep shit if you don’t know some time-series oriented machine learning techniques. There was much work done in the 80s and 90s on tools like recurrent ANNs and feedforward ANNs for starters, and there has been much work in this line since then. There are plenty of other useful tools and techniques. Once in a while someone will mention dynamic time warping in a book, but nobody seems real happy about this technique. Many books mention Hidden Markov Models, which are important, but they’re only useful when the data is at least semi-Markov, and you have some idea of how to characterize it as a sequence of well defined states. Even in this case, I daresay not even the natural language recognition textbooks are real helpful (though Rabiner and Juang is OK, it’s also over 20 years old). Similarly, there are no review papers treating this as a general problem. I guess we TS guys are too busy raking in the lindens to write one.
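Since dynamic time warping gets name-checked above, here is a minimal sketch of the textbook version for two one-dimensional series, using absolute difference as the local cost. It is the plain quadratic-time recurrence with no windowing or lower-bound tricks; the function name is an assumption for the example.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two numeric sequences.

    cost[i][j] holds the cheapest alignment of the first i points of a
    with the first j points of b.
    """
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: repeat a point of b, repeat a point of a,
            # or advance both series together.
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]
```

A series and a time-stretched copy of it come out at distance zero, for example `dtw_distance([0, 1, 2, 3], [0, 0, 1, 2, 2, 3])`, which is the property that makes it useful and also the reason it can be too forgiving.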

Conformal prediction: I will be surprised if anyone reading this has even heard of conformal prediction. There are no wikipedia entries. There is a website and a book. The concept is simple: it would be nice to put well-motivated error bars on a machine learning prediction. If you read the basic books, stuff like k-fold cross validation and the jackknife trick are the entire story. OK, WTF do I do when my training is online? What do I do in the presence of different kinds of noise? Conformal prediction is a step towards this, and hopefully a theory of machine learning confidence intervals in general. It seems to mostly be the work of a small group of researchers who were influenced by Kolmogorov, but others are catching on. I’m interested. Not interested enough to write one, as of yet, but I’d sure like to play with one.
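To give a flavor of what "well-motivated error bars" means, here is a minimal sketch of the simplest variant, usually called split (or inductive) conformal prediction, for regression. It is a batch procedure, so it does not answer the online question raised above; it only shows the core trick of turning held-out residuals into an interval with a coverage guarantee under exchangeability. The function names, the stand-in model and the synthetic data are assumptions for the illustration.

```python
import math
import random


def conformal_halfwidth(calibration_residuals, alpha=0.1):
    """Half-width q so that prediction +/- q covers a new point with probability >= 1 - alpha,
    assuming calibration and new points are exchangeable."""
    scores = sorted(abs(r) for r in calibration_residuals)
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))  # 1-indexed order statistic
    if rank > n:
        return float("inf")  # too few calibration points for this alpha
    return scores[rank - 1]


def sample(rng):
    """One draw from a made-up process: y = 2x + Gaussian noise."""
    x = rng.uniform(0, 10)
    return x, 2.0 * x + rng.gauss(0, 1)


def empirical_coverage(n_calibration=1000, n_test=5000, alpha=0.1, seed=0):
    """Calibrate on held-out data, then count how often fresh targets fall inside the interval."""
    rng = random.Random(seed)

    def predict(x):
        return 2.0 * x  # stand-in for any already-fitted model

    residuals = []
    for _ in range(n_calibration):
        x, y = sample(rng)
        residuals.append(y - predict(x))
    q = conformal_halfwidth(residuals, alpha)

    covered = 0
    for _ in range(n_test):
        x, y = sample(rng)
        covered += abs(y - predict(x)) <= q
    return covered / n_test
```

The model inside `predict` is treated as a black box, which is the appealing part: the interval is only as tight as the model is good, but the coverage statement does not depend on the model being right.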

ML in the presence of lots of noise: The closest thing to a book on it is the bizarro (and awesomely cool) “Pattern Theory: The Stochastic Analysis of Real World Signals” by Mumford and Desolneux, or perhaps something in the corpus of speech recognition and image processing books. This isn’t exactly a cookbook or exposition, mind you: more of a thematic manifesto with a few applications. Obviously, signal processing has something to say about the subject, but what about learners which are designed to function usefully when we know that most of the data is noise? Fields such as natural language processing and image processing are effectively ML in the presence of lots of noise and confounding signal, but the solutions you will find in their textbooks are specifically oriented to the problems at hand. Once in a while something like vector quantization will be reused across fields, but it would be nice if we had an “elements of statistical learning in the presence of lots of noise” type book or review paper. Missing in action, and other than the specific subfields mentioned above, there are no research groups which study the problem as an engineering subject. New stuff is happening all the time; part of the success of “Deep Learning” is attributable to the Drop Out technique to prevent overfitting. Random forests could be seen as a technique which genuflects at “ML in the presence of noise” without worrying about it too much. Marketing guys are definitely thinking about this. I know for a fact that there are very powerful learners for picking signal out of shitloads of noise: I’ve written some. It would have been a lot easier if somebody wrote a review paper on the topic. The available knowledge can certainly be systematized and popularized better than it has been.

Feature engineering: feature engineering is another topic which doesn’t seem to merit any review papers or books, or even chapters in books, but it is absolutely vital to ML success. Sometimes the features are obvious; sometimes not. Much of the success of machine learning is actually success in engineering features that a learner can understand. I daresay document classification would be awfully difficult without tf-idf representation of document features. Latent Dirichlet allocation is a form of “graphical model” which works wonders on such data, but it wouldn’t do a thing without tf-idf. [correction to this statement from Brendan below] Similarly, image processing has a bewildering variety of feature extraction algorithms which are of towering importance for that field; the SIFT descriptor, the GIST and HOG descriptors, the Hough transform, vector quantization, tangent distance [pdf link]. The Winner Take All hash [pdf link] is an extremely simple and related idea… it makes a man wonder if such ideas could be used in higher (or lower) dimensions. Most of these engineered features are histograms in some sense, but just saying “use a histogram” isn’t helpful. A review article or a book chapter on this sort of thing, thinking through the relationships of these ideas, and helping the practitioner to engineer new kinds of feature for broad problems would be great. Until then, it falls to the practitioner to figure all this crap out all by their lonesome.
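For anyone who has not computed tf-idf by hand, here is a minimal sketch of one common variant: term frequency normalized by document length, times the log of inverse document frequency. Many other weightings exist, and this is not necessarily the one any particular package uses; the function name and the tiny corpus in the usage note are assumptions for the example.

```python
import math
from collections import Counter


def tf_idf(documents):
    """documents: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(documents)
    # Number of documents each term appears in (counted once per document).
    doc_freq = Counter(term for doc in documents for term in set(doc))
    weighted = []
    for doc in documents:
        counts = Counter(doc)
        weighted.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weighted
```

On the corpus `[["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]`, the word "the" appears in every document and gets weight zero, while "dog" outweighs "cat" because it is rarer. That is the whole trick: a histogram of words, reweighted so that uninformative words drop out, which also explains why it is handy for pruning a vocabulary before fitting a model that wants raw counts.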

Unsupervised and semi-supervised learning in general: almost all books, and even tools like R, inherently assume that you are doing supervised learning, or else you’re doing something real simple, like hierarchical clustering, kmeans or PCA. In the presence of a good set of features, or an interesting set of data, unsupervised techniques can be very helpful. Such techniques may be crucial. They may even help you to engineer new features, or at least reduce the dimensionality of your data. Many interesting data sets are only possible to analyze using semi-supervised techniques; recommendation engines being an obvious beneficiary of such tricks. “Deep learning” is also connected with unsupervised and semi-supervised approaches. I am pretty sure the genomics community does a lot of work with this sort of thing for dimensionality reduction. Supposedly Symbolic Regression (generalized additive models picked using genetic algorithms) is pretty cool too, and it’s in my org-emacs TODO lists to look at this more. Lots of good unsupervised techniques such as Kohonen Self Organizing Maps have fallen by the wayside. They’re still useful: I use them. I’d love a book or review article which concentrates on the topic, or just provides a bestiary of things which are broadly unsupervised. I suppose Olivier Chapelle’s book is an OK start for semi-supervised ideas, but again, not real unified or complete.

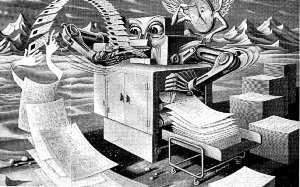

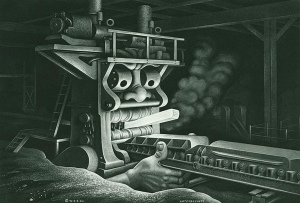

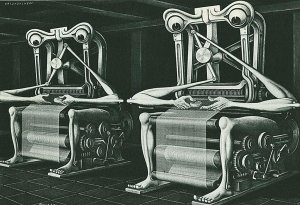

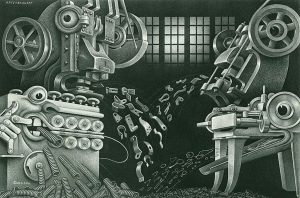

Images by one of my heroes, the Ukrainian-American artist Boris Artzybasheff. You can find more of it here.

Interesting list of things. One small issue: Latent Dirichlet Allocation can’t be run on a tf-idf representation of documents. It’s only defined for the raw word counts version of the data.

Thanks for the correction; 3 hours of sleep, and I was confusing what I was doing with the topicmodels package, which is the LDA implementation I was working with. tf-idf was used at some stage in the analysis, but I’m too tired to go remember how it figured in; probably part of the preprocessing to establish what goes into the bag of words.

It’s certainly the case that preprocessing is pretty important to get useful results out of LDA, like pruning stopwords or highly frequent words from the vocabulary. tf-idf could be used for this…

Looking at my old work now that I am legally sane again, I see, in fact, I was using tf-idf to prune out low tf-idf scores and frequency. Thanks for the correction; I’ll link it in the post.

Also; have fun at Zoo Mass Lederle; I spent many hours screwing around with computers on a VT100 terminal there back in the day.

I did not get much from the Vovk, et al. book on conformal prediction, since the techniques still seem to rely on a lot of state (i.e. memory) hanging around. Maybe I should give it another read.

The book gave me a serious headache: I don’t know if I actually got anything out of it, and wouldn’t care about this topic at all if it were my only exposure.

The working papers on their website are better. Considering our mutual taste for instance learning, maybe “Regression Conformal Prediction with Nearest Neighbours” by Papadopoulos, Vovk and Gammerman. As a practical paper, I thought it was most comprehensible.

I tried to address the notion of online learners in a paper for a ML class years ago with a paper called “On the Job Training”. Tried to publish it, but it was rejected.

Whoops, forgot the link: http://lunkwill.org/cv/ojt.pdf

Reminds me a bit of the Cesa-Bianchi/Lugosi guys.

This is a great list of topics, thanks for posting. I’m looking forward to reading the post in more detail.

I think that Bishop might already cover this, but most machine learning books/software packages neglect to deal with nested/hierarchical sampling designs, which are very common in the sciences and probably in most other applications (e.g. customers within a restaurant and restaurants within a city: you could try to estimate a coefficient for each entity, but it will often make more sense to put a probability distribution over their possible values).

As a scientist, I often find myself being forced to choose between:

* a method that respects the structure of my data but assumes linearity (e.g. generalized linear mixed models)

* a more flexible method that ignores the structure of the data (e.g. trees, svm, neural nets)

* Designing my own software to handle the problem.

There are a few exceptions (I think there is decent off-the-shelf software for fitting generalized additive mixed models), but I’d love to have more options.

[cross-posted from the Reddit thread: http://www.reddit.com/r/MachineLearning/comments/2bp5na/neglected_machine_learning_ideas/]

When you mention “Norvig’s chapter” on RL, do you mean in Russell & Norvig’s AIMA? It seems odd to give one author credit for a chapter of a 2-author book, and anyway I would have assumed Stuart wrote most of that chapter, not Peter, based on their publication histories.

Hi Scott,

interesting topics (as always).

One question: do you think machine learning will (or should) play a bigger role in IT security (malware detection, intrusion detection)?

Regards

Maggette

I dunno; how badly they do with simple stuff like spam filters doesn’t inspire a lot of confidence. I had thought about using unsupervised learning on logfiles though.

I wouldn’t say spam filters are easy. There is so much money in fraud, that there is real motivation to make spammy emails work. Classifying something is hard if that thing is changing specifically to avoid your classifier.

I have nothing to add except “thank you”. But I have to say it anyway, because folks who post cool content like this for free on their blogs deserve to know that total strangers appreciate it. 🙂

Same here. Thanks.

“The machine learning literature is vast, techniques are bewilderingly diverse, multidisciplinary and seemingly unrelated.”

Totally agree. In view of this reality, I have to be really sceptical about the so called point and click machine learning platforms that offer limited solutions based on some popular algorithms.

Those are pretty silly, indeed, as I am sure you’ve found in your professional work.

CCW is a cool technique; nice to know I’m not the only partisan of instance learning. BTW, you know you can get n log n on NN searches if you stick the data in a tree, right? One of these days, I intend to build distributed KNN for the J language.

You ever go to Tony Tran’s ML meets?

Yes I agree. I was doing brute force O(n x n) on Hadoop for KNN.
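For reference, the tree trick mentioned in this exchange can be sketched with a k-d tree: build it once, and each nearest-neighbor query then prunes most of the data instead of scanning all of it (roughly logarithmic per query in low dimensions, degrading as the dimension grows). This is a minimal single-machine sketch, not a distributed implementation; the function names are assumptions for the example.

```python
def build_kdtree(points, depth=0):
    """Split on the median along alternating axes. A node is (point, left, right)."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return (
        points[mid],
        build_kdtree(points[:mid], depth + 1),
        build_kdtree(points[mid + 1:], depth + 1),
    )


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(node, target, depth=0, best=None):
    """Return the stored point closest to target, skipping subtrees that cannot win."""
    if node is None:
        return best
    point, left, right = node
    if best is None or squared_distance(point, target) < squared_distance(best, target):
        best = point
    axis = depth % len(target)
    diff = target[axis] - point[axis]
    near, far = (left, right) if diff < 0 else (right, left)
    best = nearest(near, target, depth + 1, best)
    # Only cross the splitting plane if it is closer than the best match so far.
    if diff ** 2 < squared_distance(best, target):
        best = nearest(far, target, depth + 1, best)
    return best
```

Usage is `tree = build_kdtree(points)` followed by `nearest(tree, query)`; checking the result against a brute-force `min` over all points is a cheap sanity test.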

fortunately online learning is used at our work

Is your company hiring?

Overall a really good article. I would just like to mention a few things:

I feel like to be good at “data” you really have to explore a bit of everything. The way statisticians think of things is different than ML people, which is different than robotics/AI people, etc. But all have some good qualities. I also think that the big folly is that these people don’t talk to each other enough. Outside of some basic probability theory, the stuff I learned in stats, ML, AI, and probabilistic modeling were all fairly different. That being said, you did leave out some cool things:

time series- there is a huge amount of research in this field in statistics. There is lots of cool stuff in anomaly detection, functional time series (extremely cool!) , change point analysis, panel data, etc.

conformal prediction- again, this is fairly big in statistics. We don’t call it that, but for the most part people don’t use methods unless they can say something about the errors (usually that whatever estimator is consistent). A lot of cool stuff starts with Efron, but basically the bootstrap is probably the most powerful technique to come out of modern day statistics, and it can be used to estimate what kind of errors you are looking at.

[pdf link: efron1982_tech_rep.pdf]
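As a concrete instance of the bootstrap mentioned in this comment, here is a minimal sketch of a percentile bootstrap confidence interval for an arbitrary statistic. It is the plain batch version; the function name, resample count and default confidence level are assumptions for the example.

```python
import random
import statistics


def bootstrap_ci(sample, statistic=statistics.fmean, n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for statistic(sample)."""
    rng = random.Random(seed)
    # Recompute the statistic on resamples drawn with replacement from the data.
    estimates = sorted(
        statistic(rng.choices(sample, k=len(sample))) for _ in range(n_resamples)
    )
    tail = (1 - level) / 2
    lower = estimates[int(tail * n_resamples)]
    upper = estimates[int((1 - tail) * n_resamples) - 1]
    return lower, upper
```

Swapping `statistics.fmean` for `statistics.median`, or for a function that refits a model and returns a prediction, gives error bars on those quantities with no extra theory, which is why the technique travels so well.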

Regression is also fleshed out to a much larger extent in stats, and the focus is more towards how/why it works and how to utilize it well for general problems.

Finally, I would also like to mention real analysis/measure theory. These aren’t techniques per se, but they are the core theory behind the ideas you are trying to achieve (convergence in distribution, metric spaces, measure spaces, function spaces, etc). I feel like if most people read a bit about this they would have a much much better understanding of what exactly their methods are trying to do.

Thanks for a thoughtful post, O Tim, who can conjure fire without flint nor tinder:

I saw a vague hand wavey thing about how measure theory applies to statistical problems 20 years ago in a Hilbert Space class: can you recommend a decent book?

See, bootstraps do get used in batch learning, just like they are used in stats: it’s the online part which makes conformal prediction different. During some sections of a time series, your error may be low, and you may only be able to figure that out online: that’s important information you’d be leaving on the table otherwise. At least that is my understanding of the importance of conformal prediction. Like I said: the book and most of the articles gave me a headache.

I don’t think statisticians can do much with timeseries with really pathological characteristics and unknown generating functions, like, say, Futures prices or electrical load demand. I’m not sure what statisticians can do even in the presence of irregular time series (I should probably consult Tsay to find out, but I’ll admit my ignorance here). ML techniques can really shine in both situations. I know this, because I’ve been paid to kick the crap out of someone else’s ML gizmo, which in turn is much better than what one can do with classical statistical techniques. The ML literature should have more material on how to do this, IMO. FWIIW, I have coded up what I think is a “functional time series” doodad for playing in the forward curve of oil futures. It is pretty cool.

I think the big thing is that once you get to a level of math where you are talking about L2 and Hilbert Spaces, you’re at the level where your random variables are measurable functions (sure you could talk about L2 in a Riemann sense, but why bother. I’m sure a lot of really cool results wouldn’t hold since you lose meaningful stuff like dominated convergence, and pathological stuff like measure zero sets can really hurt you.) [ These guys all mention good points, especially Rosenblatt http://www.quora.com/How-important-is-Measure-Theory-to-Statistics ] For good books, I would just look at measure based probability books. The one we used was written by a professor at our school, so I bet you could find better :p .

For Hilbert Spaces, it depends a lot on the statistics you do. At my university we did a lot of functional time series, in which case you are talking about L2 observations, and then you need a LLN and CLT on Hilbert Spaces. As for good books, I can’t really think of any good ones off the top of my head. At that point though, the books are mostly collections of papers, but this author is a good place to start http://www.researchgate.net/profile/Lajos_Horvath/publications

They do a large amount of online stuff in stats. It usually manifests itself in change detection, but usually it assumes you have some corpus of data to train off of and then it fires when it thinks we have enough information to tell that the distribution changed. These methods actually deal with pathological cases decently, and typically most of the examples you see in papers are financial examples. However the best example of online learning I’ve seen is this

https://www.youtube.com/watch?v=4y43qwS8fl4 (demo is at roughly 38 minutes, but the entire talk is extremely cool. His methods are basically “general intelligence” methods where he tries to replicate a brain)

But yeah in general online learning is hard, but I think that the statistics, AI, and [especially] robotics literature would be a lot more rich in these fields, since it seems like ML is usually traditionally thought of to do offline stuff.

Also I forgot to mention my favorite reference books

http://books.google.com/books/about/Asymptotic_Theory_of_Statistics_and_Prob.html?id=9ByccYe5aI4C

Regarding online learning, see Mohri et al.’s “Foundations of Machine Learning”. It takes a learning theory approach to many popular algorithms, including discussion of regression for online applications.

For reinforcement learning I recommend Wiering and van Otterlo’s “Reinforcement Learning: State-of-the-Art”. Some very straightforward discussions in that text.

Those look pretty good, thanks!

Are you reading through the proceedings of the many machine learning and theoretical computer science conferences that accept papers on (most of) these topics? Because things like online learning and feature “engineering” were in almost every learning theory and data mining conference this year. And “ML in the presence of lots of noise” is a central topic in computational learning theory (for example, the complexity of learning parities with noise is a HUGE open problem). There is a vast literature on this stuff, but it sounds to me like you’re looking in the wrong places.

As another example related to another comment of yours about time series, there was a recent paper on “High-Confidence Predictions under Adversarial Uncertainty” (http://arxiv.org/abs/1101.4446), which combines online learning with time series under arbitrary conditions. These kinds of things are the bread and butter of theoretical computer science!

These things aren’t in books mostly because there is little to no incentive for researchers to write books. But I don’t believe you when you say these topics are neglected, and I see new papers monthly on many of these topics.

People publish new papers all the time. So what? People have been publishing on these subjects for decades, and nobody knows how to use them, or even what is useful.

I know many productive practitioners who have never heard of or used any of the techniques mentioned above, or any of the techniques developed over the last 40 years of research on, for example, timeseries. It isn’t for lack of conference papers. It’s because nobody has bothered to systematize these ideas and write books or teach courses about them. Hell, half the money techniques I know about are from the 70s and 80s. Number of books which mention them: 0. FWIIW, every practitioner I have ever spoken to, including those who have never heard of any of the above, is interested in “deep learning.” While this is an important subject it is certainly not as neglected as the things I mentioned above.

So by neglected you don’t mean that nobody works on these problems or knows methods and solutions. You mean that the broader world of practitioners don’t have a lot of literature on them.

So why don’t industry people put together some funding for the experts in these fields to write the kinds of books you want? Researchers have no incentive to write books, so you could work to make one.

Apparently you didn’t read the first two paragraphs of my post: it was inspired by some questionable advice from a late term grad student who was trying to help people “level up their machine learning.” As I said, the advice “read these books, then think about deep learning when you are a ninja” was not very good, as it doesn’t give the reader any sense of what is out there.

There’s also the matter that the things being spoken about in conferences are often rehashes or completely ignorant of things spoken about in conferences 40 years ago, because *nobody is writing this shit down and making sense of it.* Once you’ve been around long enough to actually examine the literature in some depth, you might realize this yourself. FWIIW, this is absolutely not the case in my old field of physics, which actually is agglomerative, so it’s rather shocking to encounter it.

Googling online learning: https://www.google.es/search?q=%22online+learning%22+%22machine+learning%22+-mooc&ie=utf-8&oe=utf-8&aq=t&rls=org.mozilla:es-ES:official&client=firefox-a&channel=sb&gfe_rd=cr&ei=RJPgU6K5INLH8gfFwoKABA

Algorithms for Reinforcement Learning available free http://www.ualberta.ca/~szepesva/papers/RLAlgsInMDPs.pdf

“The machine learning literature is vast, techniques are bewilderingly diverse, multidisciplinary and seemingly unrelated. It is extremely difficult to know what is important and useful.”

My god, yes. As a software engineer by trade, I’m finding it very hard to find a good signal among the noise (maybe ML could help me with this :D). It’s as if one were just learning programming and there were thousands of books, but all along the lines of “Write your first website with PHP and MySQL!” XOR “Understanding Hadoop Internals”.

I have taken some online courses on Probability and Statistics that were quite interesting and gave me some decent mathematical understanding of the basic concepts, but using them to find even a broad type of algorithm I might employ to solve a specific problem (much less understanding *that* algorithm enough to apply it successfully) has involved much stabbing in the dark. Another book that explains k-means clustering is about as useless as an obscure, context-less research paper.

I have ordered the Flach book as well as “Foundations of Machine Learning” mentioned above; we’ll see how those go.

I would like to have an overview of methods that are proven to be equivalent. But that really requires someone who knows the methods in depth. So perhaps it is something that just cannot be done…

For example:

* the close connection between belief propagation (or sum-product message passing) in AI, the Viterbi algorithm in dynamic programming, and the Bethe/Kikuchi approximation in statistical physics.

* the equivalence between stochastic optimal control with Hamilton-Jacobi-Bellman equations and approximate dynamic programming

* equivalence of Tikhonov regularization, ridge regression, Phillips-Twomey method, and related to Levenberg–Marquardt

* relation between principal component analysis and k-means clustering

* the different kernels for matrix factorization each resulting in different methods: singular value decomposition, nonnegative matrix factorization, probabilistic latent semantic indexing, etc.
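To make one of the listed equivalences concrete, the Tikhonov, ridge and Levenberg–Marquardt item can be written out in a few lines. This is the standard textbook form, with the notation ($X$, $y$, $\lambda$, $\Gamma$, $J$, $r$) chosen here for illustration rather than taken from the comment:

```latex
\begin{align}
  % Ridge regression (statistics): penalized least squares
  \hat{w}_{\text{ridge}}
    &= \arg\min_{w} \; \lVert Xw - y \rVert_2^2 + \lambda \lVert w \rVert_2^2
     = (X^\top X + \lambda I)^{-1} X^\top y \\
  % Tikhonov regularization (inverse problems): general regularizer \Gamma
  \hat{x}_{\text{Tik}}
    &= \arg\min_{x} \; \lVert Ax - b \rVert_2^2 + \lVert \Gamma x \rVert_2^2
     = (A^\top A + \Gamma^\top \Gamma)^{-1} A^\top b \\
  % Levenberg-Marquardt step for residual r = y - f(\beta), Jacobian J = \partial f / \partial \beta
  (J^\top J + \lambda I)\,\delta &= J^\top r
\end{align}
```

Setting $\Gamma = \sqrt{\lambda}\, I$ gives $\Gamma^\top \Gamma = \lambda I$, so the Tikhonov solution reduces to the ridge estimator; and the Levenberg–Marquardt step $\delta$ is the ridge solution of the linearized problem $\min_\delta \lVert J\delta - r \rVert_2^2 + \lambda \lVert \delta \rVert_2^2$. Same linear algebra, three literatures.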

I think skipping most of the “fancy” new algorithms and sticking to a few principles must be something a new PhD student would most profit from. To these principles I would count:

* convexity

* nonnegativity

* sparsity

* submodularity

* margins

If you think about your problem on this abstract level, it might be much easier to come across algorithms in different disciplines than your own. 🙂

Udacity has released a course on Reinforcement Learning: https://www.udacity.com/course/ud820. I can’t give an opinion about it, though (didn’t take the course and not an expert in the field).

I took the Udacity course. It is a good broad overview and the instructors are amazing. The course is anything but boring and I amply recommend it. Unfortunately, it is very short and shallow. It focuses on Markov Theory, Q Learning and Game Theory. Therefore, it can only be seen as an introduction. The instructors recommended that I read this paper as a sensible next step: http://www.cs.cmu.edu/afs/cs/project/jair/pub/volume4/kaelbling96a.pdf

I’ve been doing a lot of compression-based ML for about the last five years. You could not be more correct regarding its neglect, especially considering some of the optimality proofs around the area. I often wonder if the ‘deep learning’ (read: hierarchical lossy compression) researchers ever consider it.

Hey Rob: Thanks for the comment. It is in part the optimality proofs that blow me away about these techniques, though their great beauty and deep roots in information theory also melted my brain. I have a number of colleagues on the applied side of things who are very well versed in the latest gradient boosting tricks and the advances in DL, but who have never even heard vague rumors of these.

I guess one thing which keeps them from popular use is the fact that their native type is symbols rather than something that people ordinarily think of as data (aka floats or ints). Since most people work with floats and ints, these ideas involve some ad-hoc discretization at the front end. As you probably know, this can look peculiar on panel data.

FWIIW, I attended a Deep Learning seminar by Schmidhuber a few weeks ago. He genuflects at the god of compression for sure, but was of course a dedicated connectionist. I confess his results have distracted me; I’ve been mucking about with neurons since then.

Do you have any favorite papers or libraries you recommend, or are you doing it all custom?

Re: Time series oriented techniques in general

Maybe TS guys are too busy raking in the lindens (dosh?) to answer, but what machine learning methods do TS practitioners use on a day-to-day basis?

Also, could you describe or point to some typical nonlinear time series problems in industry?

Scott, have you heard of Spyros Makridakis? This gentleman is conducting forecasting tournaments once every few years, and after the latest one, has come to the conclusion that pure ML (whatever he means by that) underperforms pure stats, and that the best approaches are “hybrid” stat-ML. Thoughts on this? And will you participate in the next M competition (that’s what they’re called)?

I wasn’t aware of his competitions, but I have one of his books. Most people have no idea how to apply ML to timeseries, which is his field of expertise. Looking at his data sets, they’re all extremely small (here are 13 points, predict the 14th), so only someone who was very expert in ML approaches to timeseries could put a dent in them. Pretty worthless contest to generalize from.

[…] my shock and amazement, conformal prediction has been going bonkers since I mentioned it; thousands of papers a year instead of a few hundred from a smallish clique of […]